The Lesion Quantification Toolkit uses atlas-based approaches to estimate parcel-level grey matter lesion loads and multiple measures of white matter disconnection severity, including tract-level disconnection measures, voxel-wise disconnection maps, and parcel-wise disconnection matrices. The toolkit also estimates lesion-induced increases in the lengths of the shortest structural paths between parcel pairs, which provide information about changes in higher-order structural network topology. We describe in detail each of the measures produced by the toolkit, discuss their applications and considerations relevant to their use, and perform example analyses using real behavioral data collected from sub-acute stroke patients.

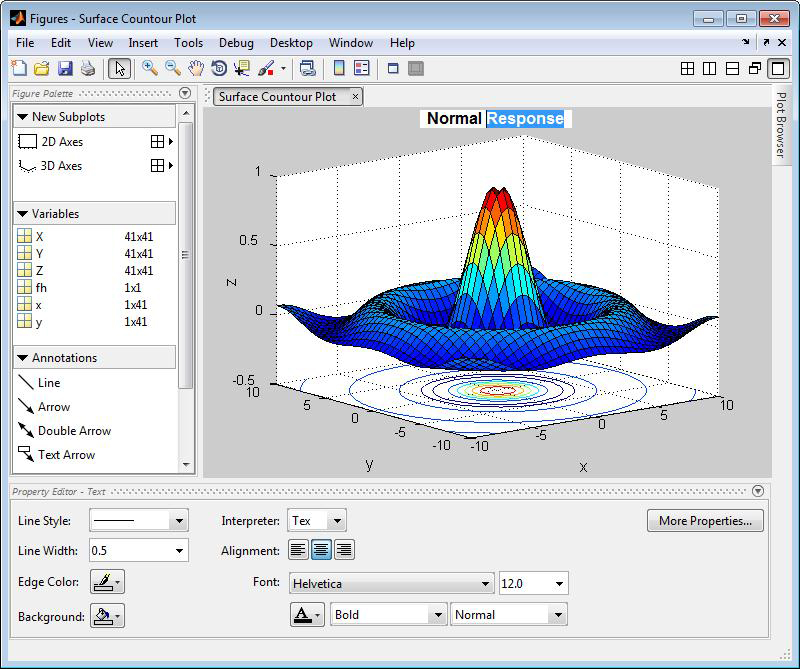

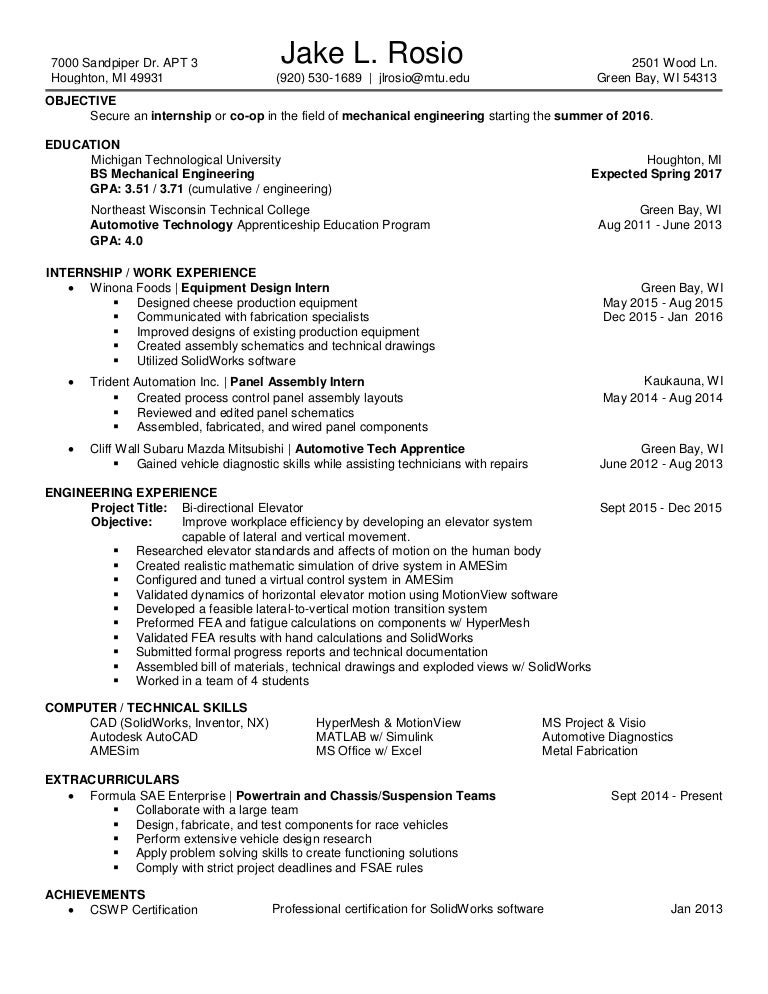

MATLAB 2012 VS 2016 SOFTWARE

Lesion studies are an important tool for cognitive neuroscientists and neurologists. However, while brain lesion studies have traditionally aimed to localize neurological symptoms to specific anatomical loci, a growing body of evidence indicates that neurological diseases such as stroke are best conceptualized as brain network disorders. While researchers in the fields of neuroscience and neurology are therefore increasingly interested in quantifying the effects of focal brain lesions on the white matter connections that form the brain’s structural connectome, few dedicated tools exist to facilitate this endeavor. Here, we present the Lesion Quantification Toolkit, a publicly available MATLAB software package for quantifying the structural impacts of focal brain lesions.

MATLAB 2012 VS 2016 CODE

I think that I am right to state that many of us time MATLAB code by wrapping the block we're interested in between tic and toc. Furthermore, we take care to ensure that the total time is of the order of tens of seconds (rather than seconds or hundreds of seconds), repeat the measurement 3 to 5 times, take some measure of central tendency (such as the mean), and draw our conclusions from that. If the piece of code takes less than, say, 10 s, then repeat it as many times as necessary to bring it into that range, being careful to avoid any impact of one iteration on the next. And if the code naturally takes hundreds of seconds or longer, either spend longer on the testing or try it with artificially small input data so that it runs more quickly.

In my experience it's not necessary to run programs for minutes to get data on average run time with acceptably low variance. If I run a program 5 times and one (or two) of the results is wildly different from the mean, I'll re-run it. Of course, if the code has any features which make its run time non-deterministic, then it's a different matter.

If it's really simple stuff you're testing, you can just time it using tic and toc: `tic` starts the timer, and `toc` ends the timer and displays the elapsed time. Also, if you want multiple timers, you can assign them to variables with `mytimer = tic` and read a specific one with `toc(mytimer)`. Finally, if you want to store the elapsed time instead of displaying it, use `myresult = toc`.
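The repeat-and-average routine described above can be sketched as a short script. This is only an illustration under stated assumptions: `workload` is a hypothetical stand-in for the code being timed, and the 0.5 s target is deliberately much shorter than the tens of seconds suggested above so that the sketch finishes quickly.

```matlab
% Hypothetical workload standing in for the block of code under test.
workload = @() sum(sqrt(1:1e5));

targetSeconds = 0.5;   % assumed per-trial target; raise it for real measurements
numTrials     = 5;     % the text suggests 3 to 5 trials

% Calibrate: double the repeat count until one trial reaches the target time.
numRepeats = 1;
while true
    t0 = tic;                      % timer handle, so other timers are unaffected
    for k = 1:numRepeats
        workload();
    end
    if toc(t0) >= targetSeconds
        break
    end
    numRepeats = numRepeats * 2;
end

% Time each trial with its own timer handle and store the elapsed time.
trialTimes = zeros(1, numTrials);
for trial = 1:numTrials
    t0 = tic;
    for k = 1:numRepeats
        workload();
    end
    trialTimes(trial) = toc(t0);   % store the result instead of displaying it
end

% Summarize with measures of central tendency, expressed per call.
perCallMean   = mean(trialTimes) / numRepeats;
perCallMedian = median(trialTimes) / numRepeats;
fprintf('%d repeats per trial; mean %.3g s, median %.3g s per call\n', ...
    numRepeats, perCallMean, perCallMedian);
```

Comparing the mean with the median is a quick way to spot a trial that is wildly different from the rest. Recent MATLAB releases also provide a built-in `timeit` function that automates a similar procedure for a function handle.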

You can use the profiler to assess how much time your functions, and the blocks of code within them, are taking. Start it with `profile on`, run `myfunctiontorun( )` (this can be a function, script or block of code), then call `profile viewer`, which opens the viewer showing you how much time everything took. The viewer also clears the current profile data for next time. Bear in mind, profile does tend to slow execution a bit, but I believe it does so in a uniform way across everything. Obviously, if your function is very quick, you might find you don't get reliable results, so if you can run it many times or extend the computation, that would improve matters.
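If you would rather read the profiler results in a script than in the viewer window, something along these lines may work. It is a sketch, not part of the answer above: `myfunctiontorun` is defined here as a hypothetical anonymous function, and `profile off` and `profile('info')` are standard profiler commands that the text above does not mention.

```matlab
% Hypothetical function under test; replace with your own code.
myfunctiontorun = @() sort(rand(1, 1e6));

profile on                 % start collecting timing data
myfunctiontorun();
profile off                % stop collecting
stats = profile('info');   % struct whose FunctionTable field holds one entry per function

% List up to five entries with the largest total time.
[~, order] = sort([stats.FunctionTable.TotalTime], 'descend');
for idx = order(1:min(5, numel(order)))
    entry = stats.FunctionTable(idx);
    fprintf('%-40s %8.4f s (%d calls)\n', ...
        entry.FunctionName, entry.TotalTime, entry.NumCalls);
end
```

Which entries appear depends on the profiler settings and on how much of the work happens inside built-in functions, so the table may be short for a workload as small as this one.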
